By default Logstash is listening to Filebeat on port 5044. I have configured it to the IP address of the server I’m running my ELK stack, but you can modify it if you are running Logstash on a separate server.

Note the line 21, the output.logstash field and the hosts field. Given below is a sample filebeat.yml file you can use.

#DOCKER CONTAINER LOGS UPDATE#

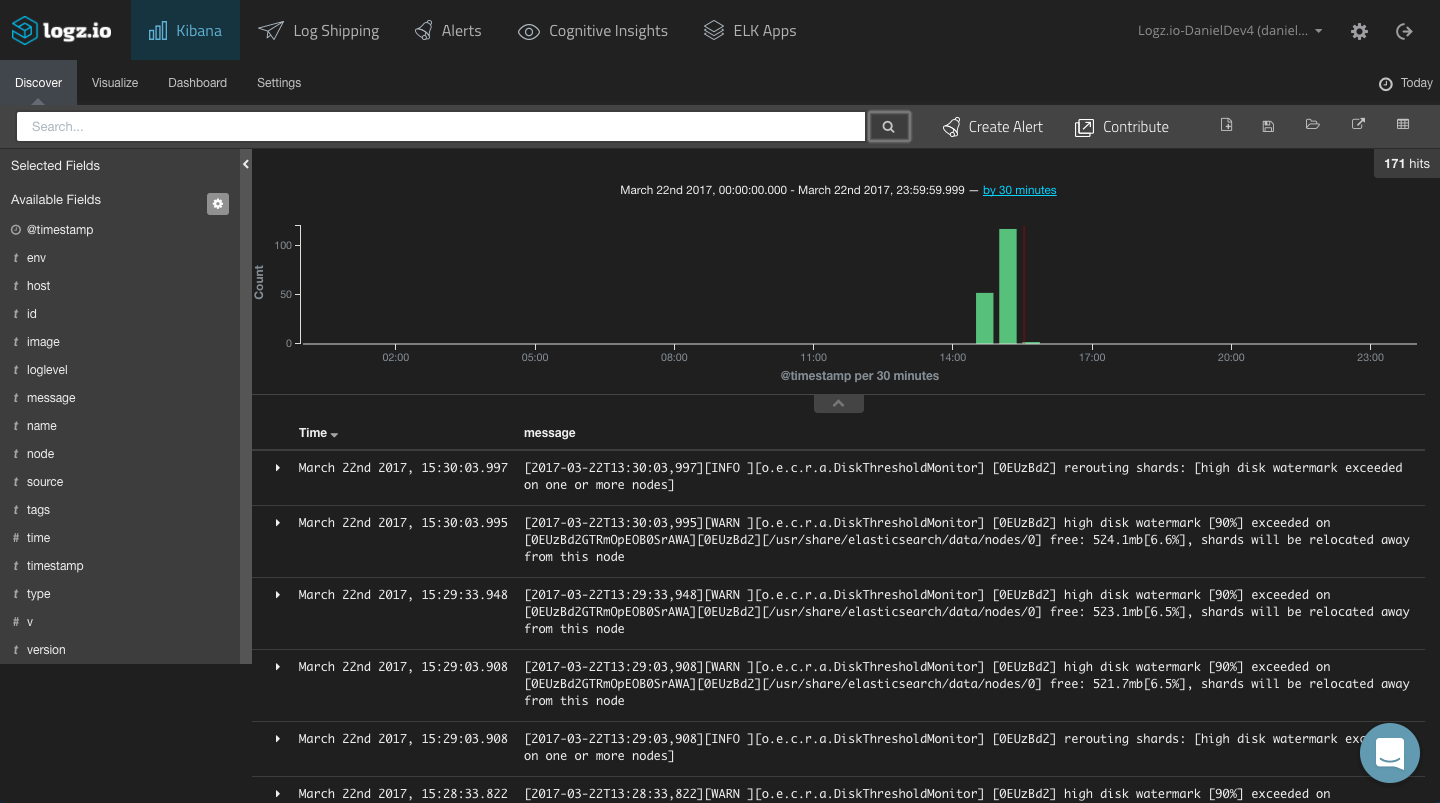

You can access the repo here.Īs the initial step, you need to update your filebeat.yml file which contains the Filebeat configurations. But since I have done several changes to filebeat.yml according to requirements of this article, I have hosted those with rvice (systemd file) separately on my own repo. This is often known as single responsibility principle.ĪDVERTISEMENT Configuring Filebeat For this section the filebeat.yml and Dockerfile were obtained from Bruno COSTE’s sample-filebeat-docker-logging github repo. High Level Architecture - Instance 1 | Instance 2 īy doing this kind of implementation the running containers don’t need to worry about the logging driver, how logs are collected and pushed. These data can also be sent easily by Filebeat along with the application log entries. Filebeat will then extract logs from that location and push them towards Logstash.Īnother important thing to note is that other than application generated logs, we also need metadata associated with the containers, such as container name, image, tags, host etc… This will allow us to specifically identify the exact host and container the logs are generating. Our tomcat webapp will write logs to the above location by using the default docker logging driver. Filebeat will be installed on each docker host machine (we will be using a custom Filebeat docker file and systemd unit for this which will be explained in the Configuring Filebeat section.)

var/lib/docker/containers//In Linux by default docker logs can be found in this location: Instance 1 is running a tomcat webapp and the instance 2 is running ELK stack (Elasticsearch, Logstash, Kibana).

#DOCKER CONTAINER LOGS HOW TO#

In this post, we will look into how to use the above mentioned components and implement a centralized log analyzer to collect and extract logs from Docker containers.įor the purposes of this article, I have used two t2.small AWS EC2 instances, running Ubuntu 18.04 installed with Docker and Docker compose.

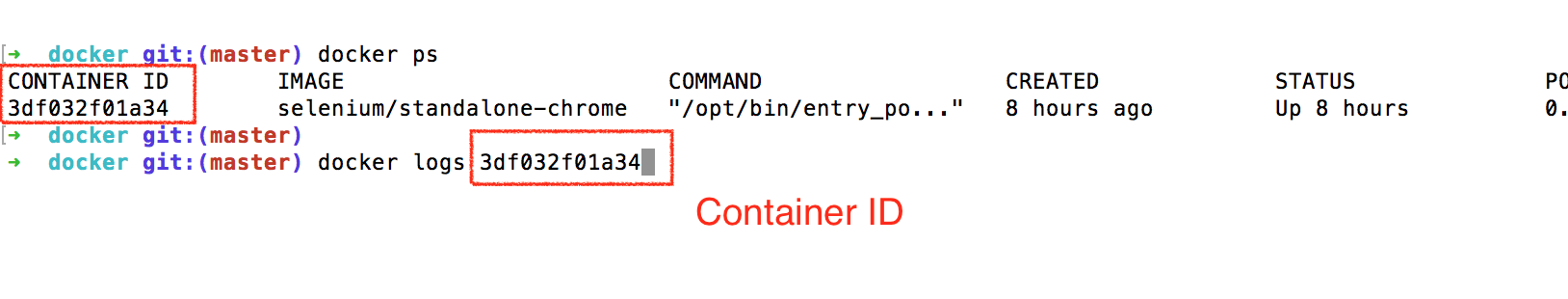

Why then think of Elastic Stack to analyze your logs? Well, there are mainly two burning problems here: If you have containerized your application with a container platform like Docker, you may be familiar with docker logs which allows you to see the logs created within your application running inside your docker container.

#DOCKER CONTAINER LOGS SOFTWARE#

Logging allows software developers to make this hectic process much easier and smoother. Software developers spend a large part of their day to day lives monitoring, troubleshooting and debugging applications, which can sometimes be a nightmare.

#DOCKER CONTAINER LOGS CODE#

Logs enable you to analyze and sneak a peak into what’s happening within your application code like a story.

Logging is an essential component within any application. By Ravindu Fernando How to simplify Docker container log analysis with Elastic Stack Background Image Courtesy - | Created via
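The Filebeat setup described above (read Docker's log files from the host, attach container metadata, ship everything to Logstash on port 5044) can be sketched as a minimal filebeat.yml. This is not the author's file, so the "line 21" reference does not apply to it. It assumes Filebeat 7.2 or later, where the `container` input type is available, and `LOGSTASH_HOST` is a placeholder for the address of the server running Logstash.

```yaml
# Illustrative sketch only, not the filebeat.yml from the author's repo.
# Assumes Filebeat 7.2+ (the `container` input type).

filebeat.inputs:
  - type: container
    # Default location of Docker's json-file logs on a Linux host.
    paths:
      - /var/lib/docker/containers/*/*.log

processors:
  # Enrich each event with container metadata (name, image, labels).
  # Filebeat needs read access to the Docker socket for this.
  - add_docker_metadata:
      host: "unix:///var/run/docker.sock"

output.logstash:
  # LOGSTASH_HOST is a placeholder: use the IP address or hostname of
  # the server running Logstash. 5044 is the port Logstash listens on
  # for Filebeat in this setup.
  hosts: ["LOGSTASH_HOST:5044"]
```

If Filebeat itself runs as a container, as in this setup, the host's /var/lib/docker/containers directory and /var/run/docker.sock would most likely need to be mounted into it (read-only is enough) so the paths above resolve.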
